NTT Research and Cornell Scientists Introduce Deep Physical Neural Networks

- January 31, 2022

Article in Nature Explains the Application of Physics-Aware Training Algorithm and Shares Results of Tests on Three Physical Systems

Sunnyvale, Calif. – January 31, 2022 – NTT Research, Inc., a subsidiary of NTT (TYO:9432), and Cornell University today announced that scientists representing their organizations have introduced an algorithm that applies deep neural network training to controllable physical systems and have demonstrated its implementation on three types of unconventional hardware. The team released its findings in an article titled “Deep physical neural networks trained with backpropagation,” published on January 26 in Nature, one of the world’s most cited scientific journals. The paper’s co-lead authors are Logan Wright and Tatsuhiro Onodera. Both are research scientists at the NTT Research Physics and Informatics (PHI) Lab and NTT Research visiting scientists in the School of Applied and Engineering Physics at Cornell. The project’s leader, Peter McMahon, assistant professor of Applied and Engineering Physics at Cornell, was one of five other co-authors of this paper. The paper offers an approach to deep learning not constrained by existing energy requirements and other limits to scalability.

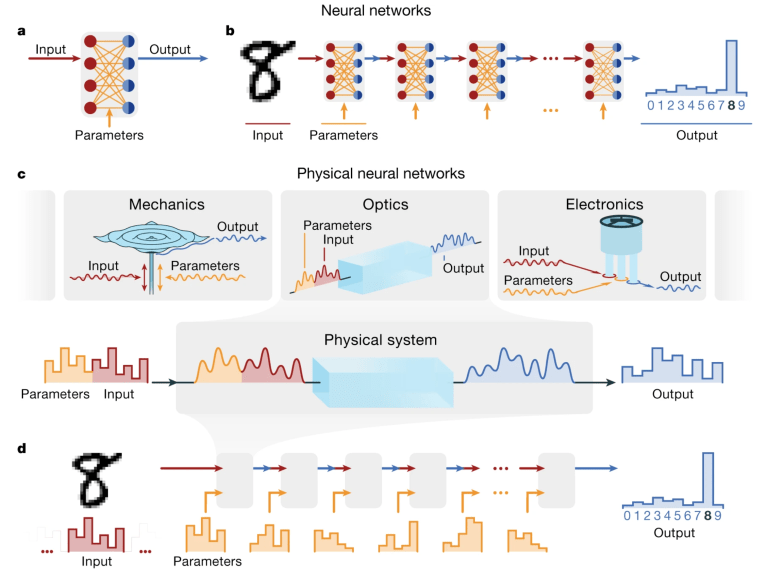

Deep learning, a subset of artificial intelligence (AI), uses neural networks that feature several layers of interconnected nodes. Deep neural networks are now pervasive in science and engineering. To train them to perform tasks such as image recognition, users rely upon a training method known as backpropagation (short for “backward propagation of errors”). To date, this training algorithm has been implemented using digital electronics. The computational requirements of existing deep learning models, however, have grown rapidly and are now outpacing Moore’s Law, the longstanding observation that the number of transistors on an integrated circuit doubles roughly every two years. As a result, scientists have attempted to improve the energy efficiency and speed of deep neural networks. The NTT Research/Cornell team has taken the approach of applying backpropagation to unconventional hardware, namely optical, mechanical, and electrical systems.

“The backpropagation algorithm is a series of mathematical operations; there’s nothing intrinsically digital about it. It just so happens that it’s only ever been performed on digital electronic hardware,” Dr. Wright said. “What we’ve done here is find a way to take this mathematical recipe and translate it into a physical recipe.”

The team calls the trained systems physical neural networks (PNNs), to emphasize that their approach trains physical processes directly, in contrast to the traditional route in which mathematical functions are trained first, and a physical process is then designed to execute them. “This shortcut of training the physics directly may allow PNNs to learn physical algorithms that can automatically exploit the power of natural computation and makes it much easier to extract computational functionality from unconventional, but potentially powerful, physical substrates like nonlinear photonics,” Dr. Wright said.

In the Nature article, the authors describe the application of their new algorithm, which they call physics-aware training (PAT), to several controllable physical processes. They introduce PAT through an experiment that encoded simple sounds (test vowels) and various parameters into the spectrum of a laser pulse and then constructed a deep PNN, creating layers by taking the outputs of optical transformations as inputs to subsequent transformations. After being trained with PAT, the optical system classified test vowels with 93 percent accuracy. To demonstrate the approach’s universality, the authors trained three physical systems to perform a more difficult image-classification task. They used the optical system again, this time demonstrating a hybrid (physical-digital) PNN. In addition, they set up electronic and mechanical PNNs for testing. The final accuracies were 97 percent, 93 percent, and 87 percent for the optics-based, electronic, and mechanical PNNs, respectively. Given the simplicity of these systems, the authors regard these results as auspicious. They forecast that, by using physical systems very different from conventional digital electronics, machine learning may be performed much faster and more energy-efficiently. Alternatively, these PNNs could act as functional machines, processing data outside the usual digital domain, with potential uses in robotics, smart sensors, nanoparticles, and elsewhere.
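
To make the idea concrete, here is a minimal sketch of a PAT-style training loop in plain Python. This release does not spell out PAT's mechanics, so the sketch rests on an assumption: the forward pass runs on the physical system, and the backward pass estimates gradients with an idealized digital model evaluated at the physically measured layer inputs. Everything in it is hypothetical and chosen for illustration only. The "hardware" is a simulated two-layer chain with gain, offset, and noise that the model does not know about. The names `physical_layer`, `model_derivs`, and `pat_step`, and all numeric values, do not come from the authors' experiments.

```python
import math
import random

GAIN, OFFSET = 0.95, 0.02  # hypothetical hardware imperfections the model ignores


def physical_layer(x, theta, rng=None, noise=0.005):
    """Stand-in for one pass through real hardware (simulated here)."""
    jitter = rng.gauss(0.0, noise) if rng is not None else 0.0
    return GAIN * math.tanh(theta * x) + OFFSET + jitter


def model_derivs(x, theta):
    """Derivatives of the idealized digital model y = tanh(theta * x)."""
    slope = 1.0 - math.tanh(theta * x) ** 2
    return theta * slope, x * slope  # (dy/dx, dy/dtheta)


def forward(thetas, x, rng=None):
    """Chain the layers: each layer's output is the next layer's input."""
    inputs = []
    for theta in thetas:
        inputs.append(x)
        x = physical_layer(x, theta, rng)
    return x, inputs


def pat_step(thetas, x, target, lr, rng):
    """One update: physical forward pass, model-based backward pass."""
    out, inputs = forward(thetas, x, rng)        # measured on the "hardware"
    grad = 2.0 * (out - target)                  # d(squared error)/d(output)
    updated = list(thetas)
    for i in reversed(range(len(thetas))):       # backpropagate via the model
        dy_dx, dy_dtheta = model_derivs(inputs[i], thetas[i])
        updated[i] = thetas[i] - lr * grad * dy_dtheta
        grad *= dy_dx
    return updated


def mean_error(thetas, reference):
    """Noise-free mean squared error against the reference settings."""
    xs = [i / 10.0 for i in range(-10, 11)]
    total = sum((forward(thetas, x)[0] - forward(reference, x)[0]) ** 2 for x in xs)
    return total / len(xs)


def train(steps=3000, lr=0.1, seed=0):
    rng = random.Random(seed)
    reference = [1.5, -0.8]  # hypothetical settings that define the target behavior
    thetas = [0.5, -0.2]     # initial controllable parameters
    before = mean_error(thetas, reference)
    for _ in range(steps):
        x = rng.uniform(-1.0, 1.0)
        target = forward(reference, x)[0]
        thetas = pat_step(thetas, x, target, lr, rng)
    return before, mean_error(thetas, reference), thetas


if __name__ == "__main__":
    before, after, thetas = train()
    print(f"error before: {before:.5f}, after: {after:.5f}, thetas: {thetas}")
```

The error signal always comes from what the simulated hardware actually produced, so mismatch between the model and the device affects only the gradient estimate, not the measured output. This is a toy under stated assumptions, not a reproduction of the optical, electronic, or mechanical systems described above.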

“This article identifies a powerful solution to the problem of power-hungry machine learning,” said PHI Lab Director Yoshihisa Yamamoto. “The research from Drs. Wright, Onodera, and colleagues combines physical systems and backpropagation in a way that is theoretically much more efficient than the status quo and applicable in a range of intriguing applications. One exciting task going forward is to explore further which physical systems are best for performing machine-learning calculations.”

The research in this article reflects the goals of the two labs represented by the co-authors. A major focus of the NTT Research PHI Lab is the Coherent Ising Machine (CIM), an information processing platform based on photonic oscillator networks, which falls under the Lab’s larger mission of “rethinking the computer from the principles of critical phenomena in neural networks.” For its part, Professor McMahon’s Lab at Cornell, which is collaborating with the PHI Lab on CIM research, is committed to addressing the question of “how physical systems can be engineered to perform computation in new ways.” The four other co-authors of the Nature article are Martin Stein, Ph.D. candidate, and Tianyu Wang, Mong Postdoctoral Fellow, both members of the McMahon Lab; and Zoey Hu and Darren Schachter, both undergraduate student researchers at Cornell when this research was conducted in 2020 and 2021. Hu was also an NTT Research intern in the summer of 2021.

In addition to Cornell, nine other universities have agreed to conduct joint research with the NTT Research PHI Lab. These include the California Institute of Technology (Caltech), Harvard University, Massachusetts Institute of Technology (MIT), the University of Notre Dame, Stanford University, Swinburne University of Technology, the Tokyo Institute of Technology, the University of Michigan, and the University of Tokyo. The NASA Ames Research Center in Silicon Valley and 1QBit, a private quantum computing software company, have also entered into joint research agreements with the PHI Lab.