Freely scalable and reconfigurable optical hardware for deep learning was published in Scientific Reports

- February 6, 2021

- Quantum and Nonlinear Optics

Title: Freely scalable and reconfigurable optical hardware for deep learning [Scientific Reports, arXiv, PDF]

Authors: Liane Bernstein, Alexander Sludds, Ryan Hamerly, Vivienne Sze, Joel Emer, Dirk Englund

Published on 4 February 2021.

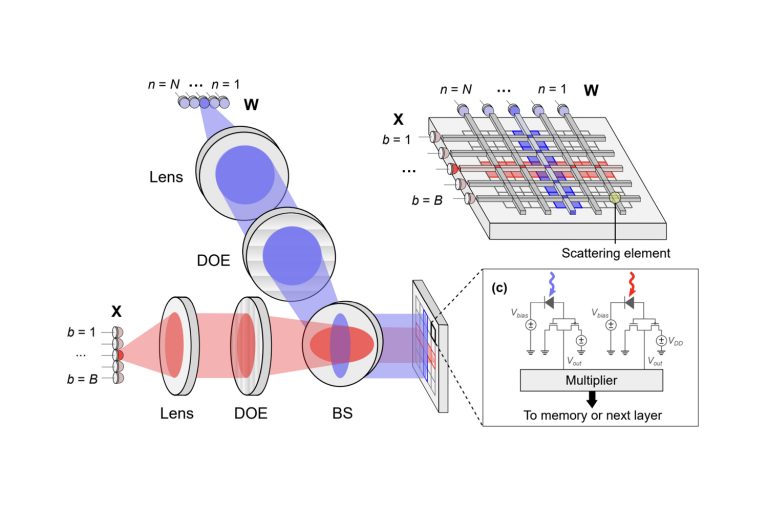

Abstract: As deep neural network (DNN) models grow ever-larger, they can achieve higher accuracy and solve more complex problems. This trend has been enabled by an increase in available compute power; however, efforts to continue to scale electronic processors are impeded by the costs of communication, thermal management, power delivery and clocking. To improve scalability, we propose a digital optical neural network (DONN) with intralayer optical interconnects and reconfigurable input values. The near path-length-independence of optical energy consumption enables information locality between a transmitter and arbitrarily arranged receivers, which allows greater flexibility in architecture design to circumvent scaling limitations. In a proof-of-concept experiment, we demonstrate optical multicast in the classification of 500 MNIST images with a 3-layer, fully-connected network. We also analyze the energy consumption of the DONN and find that optical data transfer is beneficial over electronics when the spacing of computational units is on the order of >10 micrometers.
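The abstract's central idea is optical multicast: one transmitter sends an input value once, and many receivers pick it up. The small NumPy model below illustrates how a fully-connected layer can be computed that way. It is a minimal sketch under stated assumptions, not the authors' implementation or hardware. The function names `multicast_fc_layer` and `classify`, the ReLU activations, the hidden-layer sizes, and the random weights are all hypothetical. Only the 784-value input follows from MNIST's 28×28-pixel images. Each input activation is "transmitted" once and copied to every output unit. Each output unit holds its own weight row locally and accumulates products.

```python
import numpy as np


def multicast_fc_layer(x, W, b, activation=True):
    """Compute W @ x + b (optionally ReLU) by modelling multicast data flow.

    Assumption (illustrative only): each input x[j] is sent once and
    received simultaneously by all n_out output units, and each unit i
    keeps its weight row W[i] locally.
    """
    n_out, n_in = W.shape
    acc = np.zeros(n_out)
    for j in range(n_in):
        # One transmission of x[j], fanned out to all n_out receivers.
        received = np.full(n_out, x[j])
        # Each receiver multiplies by its local weight and accumulates.
        acc += W[:, j] * received
    acc += b
    return np.maximum(acc, 0.0) if activation else acc


def classify(x, layers):
    """Run a stack of multicast layers; the last layer has no ReLU."""
    h = x
    for k, (W, b) in enumerate(layers):
        last = k == len(layers) - 1
        h = multicast_fc_layer(h, W, b, activation=not last)
    return int(np.argmax(h))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical 3-layer network: 784 -> 100 -> 100 -> 10 (sizes assumed).
    sizes = [784, 100, 100, 10]
    layers = [
        (rng.standard_normal((n_out, n_in)) * 0.05, np.zeros(n_out))
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]
    image = rng.random(784)  # stand-in for a flattened MNIST image
    print("predicted class:", classify(image, layers))
```

In this model a layer needs only `n_in` transmissions, each fanned out to `n_out` receivers, rather than `n_in * n_out` separate point-to-point transfers. The abstract argues that optical fan-out keeps the energy of each transmission nearly independent of how far away the receivers sit. That independence is what lets the receivers be arranged freely.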